In the ever-evolving landscape of higher education, online distance learning has emerged as a dynamic and accessible platform for students worldwide. However, with this shift to asynchronous online classrooms, we must prioritize inclusivity and engagement in our educational strategies. Recognizing this need, Ecampus embarked on a journey to understand inclusive course design and teaching practices through the eyes of its learners.

Survey Summary

In 2021, Ecampus implemented an Inclusive Excellence Strategic Plan. One goal of this plan focused on enhancing inclusive teaching and learning in online courses. As part of this initiative, a pilot study was conducted during the 2022–2023 academic year to develop a mechanism for students to provide feedback on their learning experiences. The study used a series of weekly surveys designed to elicit responses about moments of engagement and distancing within online courses.

Administered across five Ecampus courses, the pilot study garnered responses from 163 enrolled students. The findings provide invaluable insights into the nuances of online learning design and offer actionable recommendations for educators seeking to cultivate inclusive excellence in their own asynchronous online classrooms. Each weekly survey asked the following three questions:

- At what moment (point) in class this week were you most engaged as a learner?

- At what moment (point) in class this week were you most distanced as a learner?

- What else about your experience as a learner this week would you like to share?

These questions were carefully crafted to elicit responses related to diversity, equity, and inclusion (DEI). By using the verbs “engaged” and “distanced,” students were prompted to reflect on moments of connection and disconnection within their learning environments. The open-ended nature of the questions allowed students to provide contextual feedback, offering valuable insights beyond the scope of predefined categories.

The results of the survey provide a multifaceted understanding of students’ experiences in online courses. Across all five courses, certain patterns emerged regarding elements that students found most engaging and most distancing. These insights served as a springboard for the development of actionable recommendations aimed at enhancing course design and fostering inclusive learning environments.

Alignment

One crucial area highlighted by the survey results was the importance of alignment. Students noticed when their course assessments were aligned with course content, and they noticed when that alignment was missing. Ensuring that learning objectives are represented in instructional materials, practice activities, assessments, and evaluation criteria is key. For more on this, please see “Alignment” by Karen Watté (2017).

Learning Materials

Another prominent theme in the survey responses was the overwhelming nature of long, uncurated lists of readings and learning materials, which tended to alienate learners. Providing a reading guide or highlighting key points can alleviate this sense of overwhelm. More broadly, optimizing content presentation and learning activities emerged as a key factor in promoting engagement and inclusivity.

Incorporating interactive elements such as knowledge checks and practice activities within or between short lectures keeps students actively engaged and reinforces learning objectives. By using multiple modes of content delivery, such as videos, lectures, and readings, educators can accommodate a range of learner preferences. Students also identified study guides as an effective way to improve their comprehension of, and engagement with, learning materials.

Community & Connection

Supporting student-to-student interaction is pivotal in fostering a sense of community and participation (Akyol & Garrison, 2008). Many learners noted that they enjoyed engaging in small group discussions; in fact, 50% of students in one course identified the week 1 introductory discussion as the point at which they felt most engaged. Students across the courses were also excited to view and respond to their peers’ creative work. Course elements like these build community and collaboration within the virtual classroom.

While some students had mixed feelings about peer review activities, voicing concerns about feeling unqualified to judge their peers’ work, clear guidelines and rubrics can empower learners to develop critical thinking, take greater ownership of their learning, and strengthen their communication skills. Thoughtfully crafted peer review processes can therefore enhance the educational experience.

Authentic Activities

Incorporating authentic or experiential learning activities was also highlighted in student responses as a means of connecting course content to real-world scenarios. By integrating professional case studies, practical exercises, real-world applications, and reflective activities, educators can deepen students’ understanding of course material. Survey respondents noted again and again that they felt engaged when coursework was relevant and applicable outside the classroom. This type of authentic work can also increase learner motivation (Gulikers, Bastiaens, & Kirschner, 2004).

Timely Feedback & RSI

By offering timely feedback on student work, online educators demonstrate their active presence and help students understand the critical aspects of assessments, ultimately improving their chances of success. As one student wrote:

“I really appreciate the involvement of the instructor. In the past I’ve had Ecampus classes where the teacher was doing the bare minimum and didn’t grade things until the last minute so I wasn’t even sure how I was doing in the class until it was almost over. I appreciate the speed at which things have been graded and the feedback I’ve already received. I appreciate the care put into announcements too!”

Timely feedback and timely announcements are also notable ways to demonstrate Regular and Substantive Interaction (RSI). For more on RSI, please see “Regular & Substantive Interaction in Your Online Course” by Christine Scott (2022).

Scaffolding

Another noteworthy recommendation from the survey findings was the importance of providing scaffolding and support throughout the course. Respondents expressed appreciation for feedback from peers and instructors that helped them improve their writing. Asked when they felt most engaged, one student answered, “When I used my peer review feedback to improve my draft.” Offering additional resources and tutorials for unfamiliar or complex concepts ensures that all students have the support they need to succeed. Moreover, breaking larger, high-stakes assignments into smaller, manageable tasks can reduce feelings of overwhelm, provide a sense of accomplishment, give students earlier feedback, and promote overall success.

Autonomy

Furthermore, offering choice and flexibility in assignments and assessments empowers students to take ownership of their learning journey. Whether it’s offering choice in topics, deliverable types, or exercise formats, providing students with agency fosters a sense of autonomy and engagement. One respondent noted, “I think choosing a project topic was the most engaging part of this week, because allowing students to research things that they are interested [in,] within some constraints is a good way to get them engaged and interested in the topics.”

Note on Survey Administration

One final takeaway from the study underscores the importance of thoughtful survey administration. While weekly surveys yielded robust results, participating faculty indicated that surveying students every week was too frequent. Instead, we recommend conducting surveys one to three times during the course, striking a balance between gathering insights and respecting students’ time. Transparent communication about the purpose and use of student feedback is also essential for fostering trust and eliciting honest responses. Students should understand that their feedback is valued and how it will be used to improve their learning experience, both in the current term and in future iterations of the course.

Conclusion

Fostering engagement and inclusion in online education is a multifaceted, ongoing effort. By listening to student feedback, implementing actionable recommendations, and fostering a culture of continuous improvement, educators can create transformative learning experiences that empower students to thrive in the digital age. Together, let us embark on this journey toward inclusive excellence, ensuring that every learner has the opportunity to succeed while feeling valued, supported, and empowered to reach their full potential.

References

Akyol, Z., & Garrison, D. R. (2008). The development of a community of inquiry over time in an online course: Understanding the progression and integration of social, cognitive and teaching presence. Journal of Asynchronous Learning Networks, 12(3–4), 3–22. https://doi.org/10.24059/olj.v12i3.72

Gulikers, J. T. M., Bastiaens, T. J., & Kirschner, P. A. (2004). A five-dimensional framework for authentic assessment. Educational Technology Research and Development, 52, 67–86. https://doi.org/10.1007/BF02504676

Scott, C. (2022, November 7). Regular & Substantive Interaction in Your Online Course. Ecampus Course Development & Training Blog. https://blogs.oregonstate.edu/inspire/2022/11/07/regular-substantive-interaction-in-your-online-course/

Watté, K. (2017, January 27). Alignment. Ecampus Course Development & Training Blog. https://blogs.oregonstate.edu/inspire/2017/01/27/alignment/

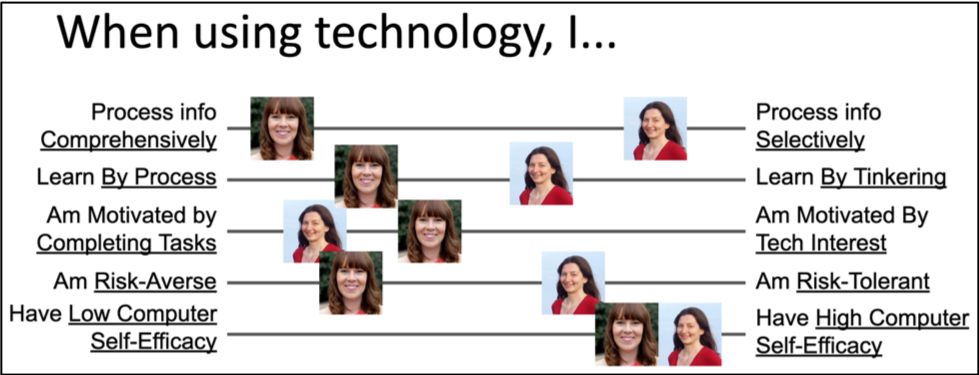

I recently volunteered to lead a book club at my institution for staff participating in a professional development program focused on leadership. The book we are using is The 9 Types of Leadership by Dr. Beatrice Chestnut. Using principles from the Enneagram personality typing system, the book describes nine behavioral styles and examines them in the context of leadership.


I didn’t have a clear-cut answer, but I recognized a strong desire to communicate this new-found awareness to others. My first thought was to find research articles. Google Scholar to the rescue! After a nearly fruitless search, I found two loosely related articles. I realized I was grasping at straws trying to cull out a relevant quote. I had to stop myself: why did I feel the need to cite evidence to validate my own experience? I was struggling to convey my thoughts cohesively and to connect them in a practical, actionable way to my job as an instructional designer. My insight felt important and worth sharing via this blog post, but what could I write that would be meaningful to others? I was stumped!

Who Cares? I Do