About halfway through earning a master’s in education, I took a summer session class on digital storytelling. It ran over three half-day sessions, during which we were required to complete two digital stories. I had no great academic ambitions in my approach to these assignments. I was trying to satisfy a degree requirement in a way that fit my schedule as a single mother of two teenagers, working full time while earning a graduate degree.

My first story was a self-introduction. I loved this assignment. Even though I had one evening to complete it, I spent hours tweaking it. I enjoyed learning the tools. I enjoyed sharing my story with my classmates. Even after it was graded, I kept finding ways to improve it.

After completing the course, I began to study the use of digital stories in education. My personal experience had shown me that completing the assignment required me to become comfortable with technology as well as to practice my writing, speaking, and presentation skills. I also felt a stronger connection to my classmates after sharing my video and watching theirs.

Literature

The research on digital storytelling echoes my own experience. Dr. Bernard Robin, an Associate Professor of Learning, Design, & Technology at the University of Houston, discussed the pedagogical benefits of digital storytelling assignments in a 2016 article, “The Power of Digital Storytelling to Support Teaching and Learning.” His research found that both student engagement and creativity increased in higher education courses when students were given the opportunity to use multimedia tools to communicate their ideas. Students “develop enhanced communication skills by learning to organize their ideas, ask questions, express opinions, and construct narratives” (Robin, 2016). Robin also found that by sharing their work with peers, students learn to give and accept critique, which fosters social learning and emotional intelligence.

Digital Storytelling for Educators

Digital storytelling in online education shouldn’t be thought of only as a means of creating an engaging student assignment. Educators who are adept at telling stories have a tremendous advantage in capturing their students’ attention. In the following short video, Sir Ian McKellen explains why stories have so much power. Telling it in the form of an illustrated story, he gives four reasons: stories are a vessel for information, create an emotional connection, display cultural identity, and give us happiness.

McKellen is a compelling narrator with a great voice, and the story is beautifully illustrated. It reminds me of how I want my learners to feel when they are consuming the content I create: so engrossed, even if only for a moment, that they forget they are learning. Learning becomes effortless. As he points out, a good storyteller can make listeners feel as if they are living the story too.

Digital Storytelling Assignments

There are lots of ways to integrate digital stories across a broad set of academic subjects. Creating personal narratives, historical documentaries, informational and instructional videos or a combination of these styles all have educational benefits. One of the simplest ways to introduce this form of assessment to your course is to start with a single image digital story assignment.

Here’s an example I created using a trial version of one of many digital story making tools available online:

Digital Story Making Process

The process of creating a digital story lends itself well to staged student projects. Here’s an example of some story-making stages:

- Select a topic

- Conduct research

- Find resources and content

- Create a storyboard

- Script the video

- Narrate the video

- Edit the final project

I created an animated digital story to illustrate the process of creating a digital story using another freely available tool online.

Recommended Resources & Tools

A simple search will turn up hundreds of tools for recording media. Before recommending any tool, review its privacy policy, its accessibility, and its cost to students.

Adobe Express (previously Adobe Spark)

Adobe offers a free online video editor which provides easy ways to add text, embed videos, add background music and narration. The resulting videos can be easily shared online via a link or by downloading and reposting somewhere else. While the tool doesn’t offer tremendous flexibility in design, the user interface is very friendly.

Canvas

Canvas has built-in tools to allow students to record and share media within a Canvas course. Instructions are documented in the OSU Ecampus student-facing quick reference guide.

Audacity

Audacity is free, open-source, cross-platform software for recording and editing audio. It has a steeper learning curve than some of the other tools used for multimedia content creation, but it will let you export your audio in a format that you can easily add to a digital story.

Padlet

Padlet allows you to create collaborative web pages and supports many content types, which makes it a great place for students to submit their video stories. You have a lot of control during setup: you can keep a board private, enable comments, and choose to moderate content before it is posted. Padlets can be embedded in other sites, and at the time of writing, the free version lets users keep up to three padlets.

Storyboarding Tools

A note first about storyboarding. Storyboarding is an essential step in creating a digital story. A storyboard is a visual blueprint of how a video will look and feel, and this is the stage at which to think about mood and flow and to gather feedback.

Students and teachers alike benefit from visualizing how they want a final project to look. It doesn’t have to be fancy. It is much easier to think about how you want a shot to look at this stage than while you are shooting and producing your video. A storyboard is also a good step in a staged, longer-term project in a course to gauge if students are on the right track.

Storyboard That

This is a storyboard creation tool. The free account allows for three- and six-frame stories. In each frame, you can choose from a wide selection of scenes, characters, and props, and you can customize the color, position, and size of each element. Here’s a sample I created:

The Boords

This site has several free-to-use templates in multiple formats to support this process. Here is one that I have used before:

A4-landscape-6-storyboard-template

Looking for Inspiration?

Start with Matthew Dicks, a teacher and the author of Storyworthy: Engage, Teach, Persuade and Change Your Life through the Power of Storytelling. He is also a five-time winner of the Moth GrandSlam championship.

His book is wonderful, but to get just a taste, start with the podcast he cohosts with his wife. Each week they feature a well-vetted, rehearsed story told during a competition, then highlight its strengths and areas for improvement. You will walk away with ideas and the motivation to become a better storyteller. Here’s the first episode, which is one of my favorites.

Conclusion

When pressed for time to develop course content, we tend to over-rely on text-based assignments such as essays and written discussion posts. Digital storytelling assignments give students the opportunity to experiment, think creatively, and practice communication and presentation skills.

For educators, moving away from presenting learning materials as narrated bulleted slides is likely to make classes more engaging and exciting for students, which may lead to better learning outcomes. Teachers work every day to connect with students and capture their attention. A good story can inspire your students and help them engage with the content.

I was uncomfortable when I received my first digital storytelling assignment. I didn’t really know how to use the tools, and I wasn’t confident that I knew how to capture content or what to capture. I was sure it would feel awkward to narrate a video. But these assignments turned out to be engaging and meaningful, and the process was pretty straightforward. Introduce digital storytelling into your courses, even by starting small, and you may well feel the same way.

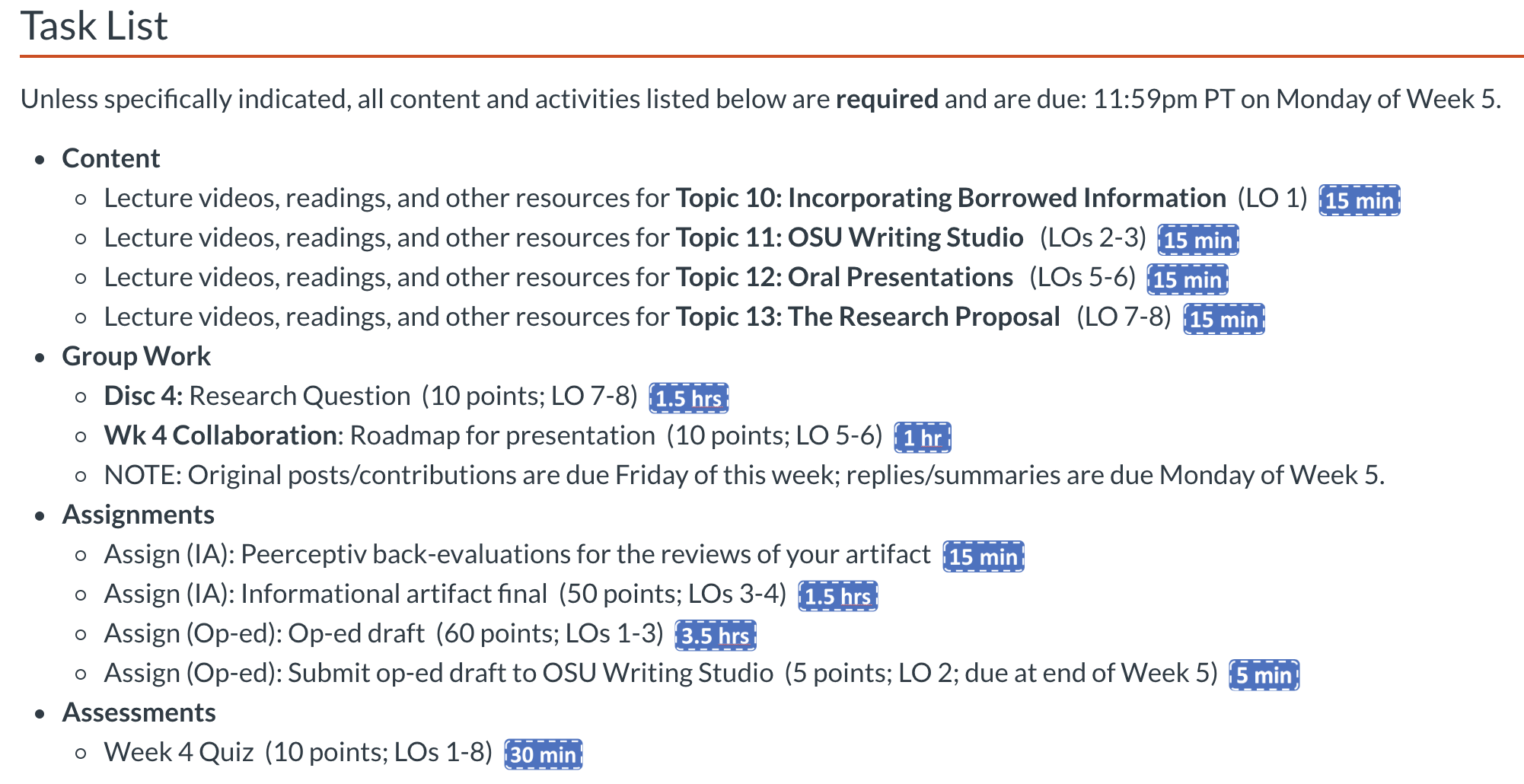

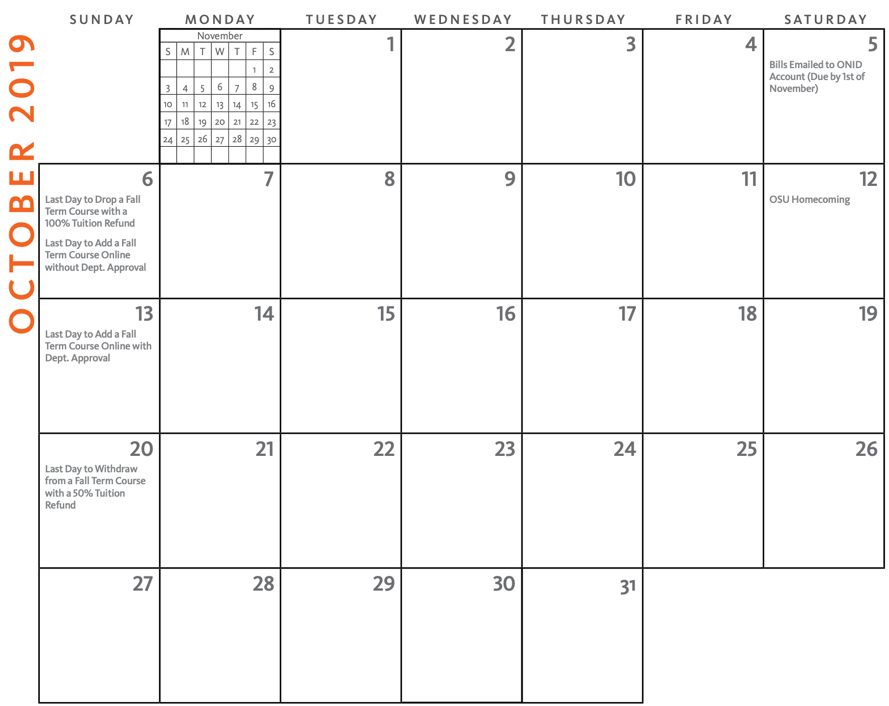

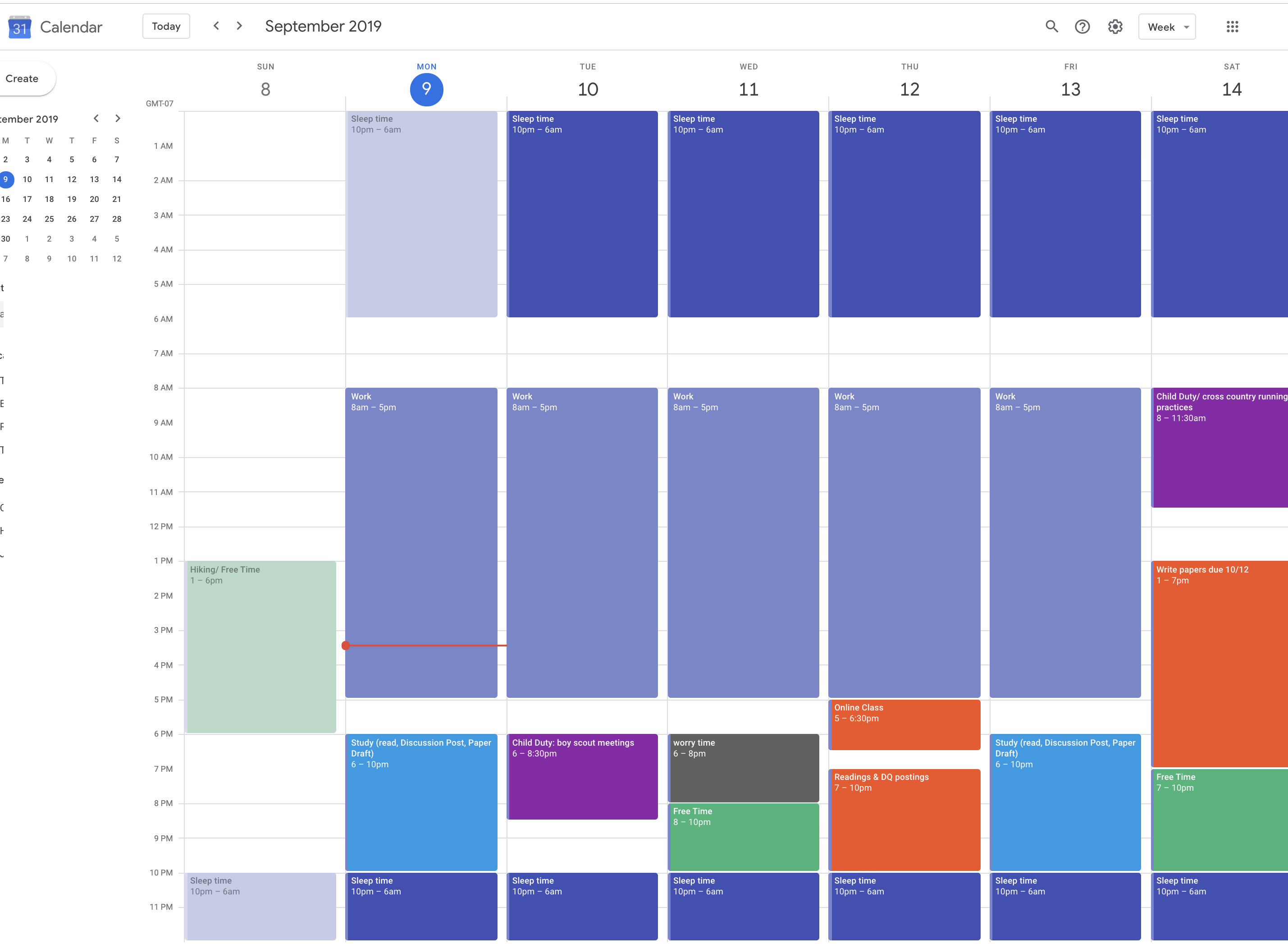

Have you ever taken a trip with a tour group? Or looked at an itinerary of places and activities to see if it meets your expectations and/or fits into your schedule? Most guided tours include an itinerary with a list of destinations, activities, and time allotted. This helps travelers manage their expectations and time.

Is blended learning right for your discipline or area of expertise? If you want to give it a try, there are many excellent internet resources available to support your transition.