Sept. 29, 2021

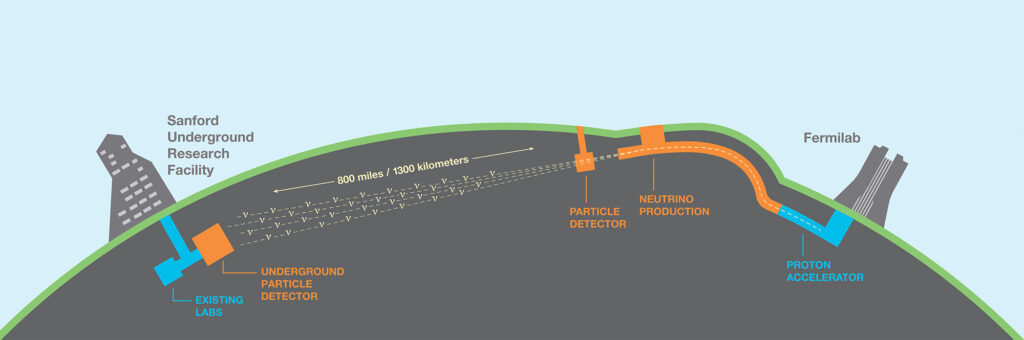

Oregon State Physics is leading a project, funded by the Department of Energy Office of Science, to design computing and software infrastructure for the DUNE experiment. DUNE is a future neutrino experiment that will aim a neutrino beam from Fermilab, in Batavia, Illinois, at a very large detector in the Homestake mine in Lead, South Dakota. The experiment is currently under construction, with a 5% prototype running at CERN in 2018 and 2022 and the full detector expected in 2029. These experiments generate data at rates of 1-2 GB/sec, or 30 PB/year, which must be stored, processed and distributed to over 1,000 scientists worldwide.

The project “Essential Computing and Software Development for the DUNE experiment” is funded for $3M over three years, shared among four universities (Oregon State, Colorado State, Minnesota and Wichita State) and three national laboratories (Argonne National Laboratory, Fermi National Accelerator Laboratory and Brookhaven National Laboratory). The collaborators will work with colleagues worldwide on advanced data storage systems, high performance computing and databases in support of the DUNE physics mission. See https://www.dunescience.org/ for more information on the experiment.

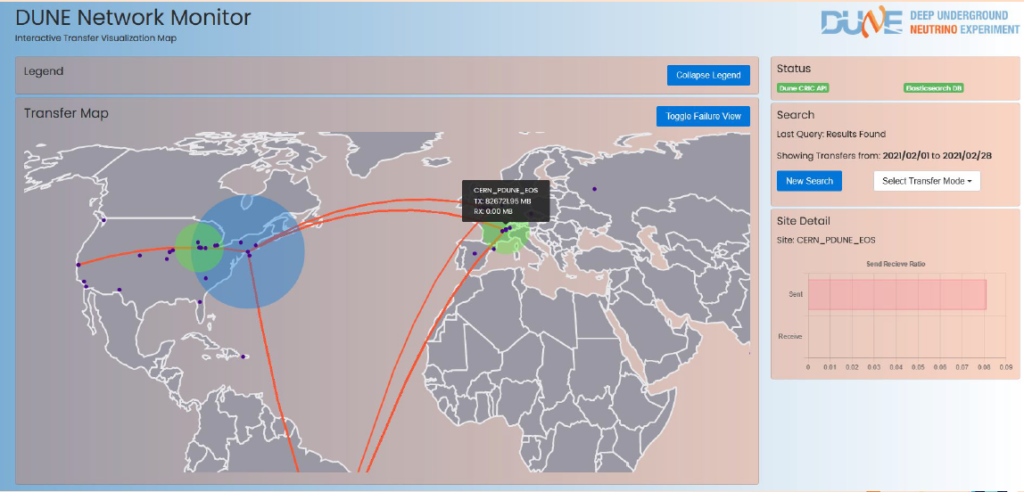

PI Heidi Schellman (Oregon State Physics) leads the DUNE computing and software consortium, which is responsible for the international DUNE computing project. Physics graduate student Noah Vaughan helps oversee the global grid processing systems that DUNE uses for data reconstruction and simulation, and recent graduate Amit Bashyal helped design the DUNE/LBNF beamline. Graduate student Sean Gilligan is performing a statistical analysis of data transfer patterns to help optimize the design of the worldwide data network. Postdoc Jake Calcutt recently joined us from Michigan State University and is designing improved methods for producing data analysis samples for the ProtoDUNE experiment at CERN.

One of the major thrusts of the Oregon State project is the design of robust data storage and delivery systems optimized for data integrity and reproducibility. Each year, 30 PB of data will be distributed worldwide and processed through a complex chain of algorithms. End users need to know the exact provenance of their data (how it was produced, how it was processed, and whether any data were lost) to ensure scientific reproducibility over the decades that the experiments will run. Preliminary versions of the data systems have already led to results from the ProtoDUNE prototype experiments at CERN, which are described in https://doi.org/10.1088/1748-0221/15/12/P12004 and https://doi.org/10.1051/epjconf/202024511002.

As an example of this work, three Oregon State computer science majors (Lydia Brynmoor, Zach Lee and Luke Penner) worked with Fermilab scientist Steven Timm on a global monitor for the Rucio storage system shown below. The monitor displays test data transfers between compute sites in the US, Brazil and Europe. The dots indicate compute sites in the DUNE compute grid, while the lines represent the test transfers.

Other projects include a Data Dispatcher, which optimizes the delivery of data to CPUs across the DUNE compute systems, and monitoring of data streaming between sites.