[This is part of our series of Research Advancing Pedagogy (RAP) blogs, designed to share the latest pedagogical research from across the disciplines in a pragmatic format]

The word metacognition is bandied around a bit. If you have been paying attention to the output of cognitive science, it would be a hard one to miss. It is not often, however, that you get to see a thorough measure of it in a classroom setting. One recent classroom-based study ambitiously measured many different elements of metacognition, along with some other key factors. Here is what the researchers did. Be prepared: there are some surprises in here, with important implications for what students should know about this concept.

What did they do? In one of the most comprehensive studies of metacognitive behaviors, Hong and colleagues (2020) examined the study behaviors and exam scores of 1,326 undergraduate students. The students were enrolled in a large face-to-face biology course and agreed to have their class information used. The study spanned semesters over two years. The course was a basic introduction, designed to serve as a prerequisite for upper-level health science courses.

The students completed one survey at the beginning of each semester, and this information was used to predict their exam scores in the class. The survey was hosted on the course's learning management system (LMS); common platforms such as Canvas, Blackboard, and Desire2Learn offer similar survey tools. The survey collected basic information such as age, ethnicity, and year in school, as well as the key variables of interest in the study. The researchers measured motivational variables including self-efficacy, achievement goals, task value, and cost. In a seemingly sneaky component, the students' learning behaviors were also scrutinized. The researchers got access, again with student permission, to the logs of student accesses to digital resources on the course site, and they collected exam scores after each semester. Students took four paper exams, each combining multiple-choice questions with a few short essays.

Curious as to how the researchers measured metacognition? They tapped into students' metacognitive monitoring processes by looking at how and when students viewed the syllabi and study guides. These materials focus students on the key content to be learned and specify the level of understanding needed for each content unit assessed by an exam, so they can be used for planning study. Students could also monitor their learning by completing ungraded self-assessment quizzes. These low-stakes (well, no-stakes) assignments gave students a way to rehearse knowledge and obtain feedback on whether their answers were correct. Students could also monitor their performance by checking grades (i.e., clicking on My Grades to view the points earned so far). In short, the LMS tracked which monitoring tools students used by recording how often they accessed each one. Notice that the key components of self-regulation (planning, monitoring, and assessing) are all available to students in your LMS.

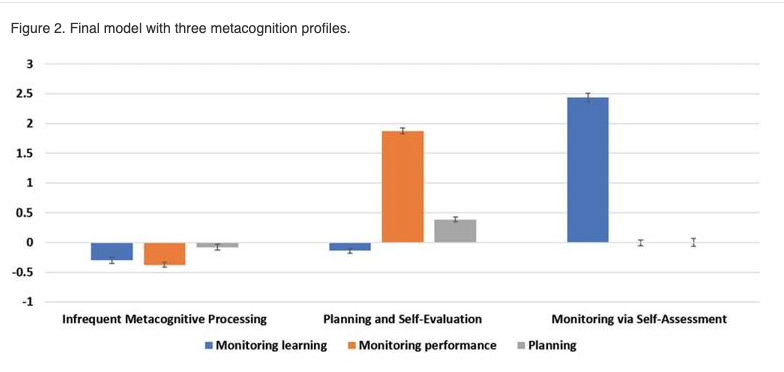

What did they find? The complete study has a lot of interesting findings about how motivation is related to metacognition, but for our purposes, let's focus on planning and monitoring. The researchers found that the metacognitively active students in the class fell into two main groups. The first group practiced only self-assessment, repeatedly monitoring their performance on the ungraded quizzes. These quizzes were designed to allow students to judge whether they knew the content. What is curious about this set of students is that they focused on the self-assessment quizzes alone, barely engaging in the other available metacognitive processes.

The second group of students engaged only in planning (using the exam resources) and checking their grades, with nearly zero self-assessment quizzing. Self-regulation gurus would expect the different types of metacognitive behaviors (planning, monitoring, and judging) to be closely related, but that was not the case.

The proof of the pudding? The groups that showed metacognitive activity did better on their exams (see Figure 2 below). The low scores in the group showing little metacognitive processing are not surprising. We told you so. What is odd is that the real-life classroom data separated planning and evaluation from monitoring via self-assessment, and that these two groups also performed differently on the exams.

What does this mean for us? Sadly, the majority of the students in this study (75%) made little use of the LMS resources designed to aid their metacognition. More students need to know that everything they do on their course sites can be monitored. We instructors can tell when they looked at a page, how long they looked at it, when they took a quiz, and a whole host of other details. The next time students go into their LMS (or think of putting it off), they should remember that both their actions and their inaction are visible to their professors. Knowing this, students may be less likely to put off using it. More importantly, they may leverage the tools at their fingertips to improve their metacognition and to plan, monitor, and evaluate their own learning.

Leave a Reply