Welcome to the future

The picture above is a thing of beauty. It shows what is called a System on a Chip, or SoC. This chip was created to power the newest line of flagship laptops from Apple. It is called the M1 Max, and it is based on the same kind of technology that Apple has used in its phones since the first iPhone launched in 2007.

The chip architecture that powers Apple’s iPhones is different from what has traditionally powered our laptops and desktop computers. This architecture is called Reduced Instruction Set Computer, or RISC. It is designed on the philosophy that instead of building very complicated, specialized hardware that responds to specialized instructions, you design a processor around a small set of simple instructions. This has a few major effects.

First, let’s talk about hardware. A typical laptop or desktop central processing unit uses a Complex Instruction Set Computer architecture, or CISC. For specific problems, a CISC chip might have special hardware built into it that allows that specific type of computation to happen very quickly. This sounds great, except that these extra functions and hardware may never even be used. This means there may be large areas of the chip’s footprint that are wasted on specialized hardware that exists only to serve a complex instruction set. If the same footprint is used to create a RISC-style chip, more of that footprint can go toward hardware that is used all the time. So if we have a budget of, say, 10 billion transistors, we can use those 10 billion transistors more effectively for general-purpose computing with a RISC-style chip. Now it might be clearer why these types of chips would be used as a cell phone’s CPU.
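To make the difference concrete, here is a toy Python sketch of the same addition done two ways: once as a single "complex" instruction that works directly on memory, and once as a short sequence of simple instructions. The instruction names (`ADDM`, `LOAD`, `ADD`, `STORE`), the register names, and the memory layout are all made up for illustration and do not correspond to any real instruction set.

```python
def run_cisc(memory):
    """Hypothetical complex instruction: ADDM result, a, b.

    One instruction reads two memory operands, adds them, and writes
    the sum back to memory. Real hardware would need extra circuitry
    to do all of that in a single instruction.
    """
    memory["result"] = memory["a"] + memory["b"]
    return 1  # number of instructions issued


def run_risc(memory):
    """The same work expressed as four simple, made-up instructions."""
    registers = {}
    program = [
        ("LOAD", "r1", "a"),        # r1 <- memory[a]
        ("LOAD", "r2", "b"),        # r2 <- memory[b]
        ("ADD", "r3", "r1", "r2"),  # r3 <- r1 + r2
        ("STORE", "r3", "result"),  # memory[result] <- r3
    ]
    for op, *args in program:
        if op == "LOAD":
            registers[args[0]] = memory[args[1]]
        elif op == "ADD":
            registers[args[0]] = registers[args[1]] + registers[args[2]]
        elif op == "STORE":
            memory[args[1]] = registers[args[0]]
    return len(program)  # number of instructions issued


if __name__ == "__main__":
    cisc_memory = {"a": 2, "b": 3, "result": 0}
    risc_memory = {"a": 2, "b": 3, "result": 0}
    print(run_cisc(cisc_memory), cisc_memory["result"])
    print(run_risc(risc_memory), risc_memory["result"])
```

Both versions end with the same value in `result`. The RISC-style version issues more instructions, but each one is simple enough that the hardware needed to run it stays small and is used constantly.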

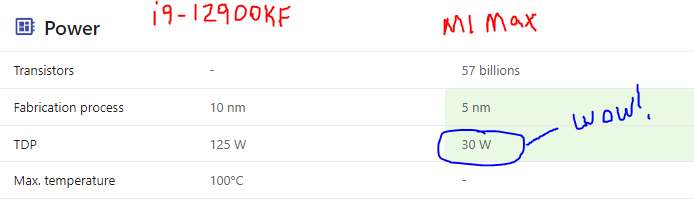

Secondly, let’s talk about heat. Since a RISC chip requires fewer transistors to provide the same functionality as a CISC chip, it can have a much lower transistor count and still perform similarly on general computation tasks. With that potentially lower transistor count, a RISC chip can generate less heat and consume less power than a CISC chip. This is another huge advantage for devices where heat and power consumption are major factors.

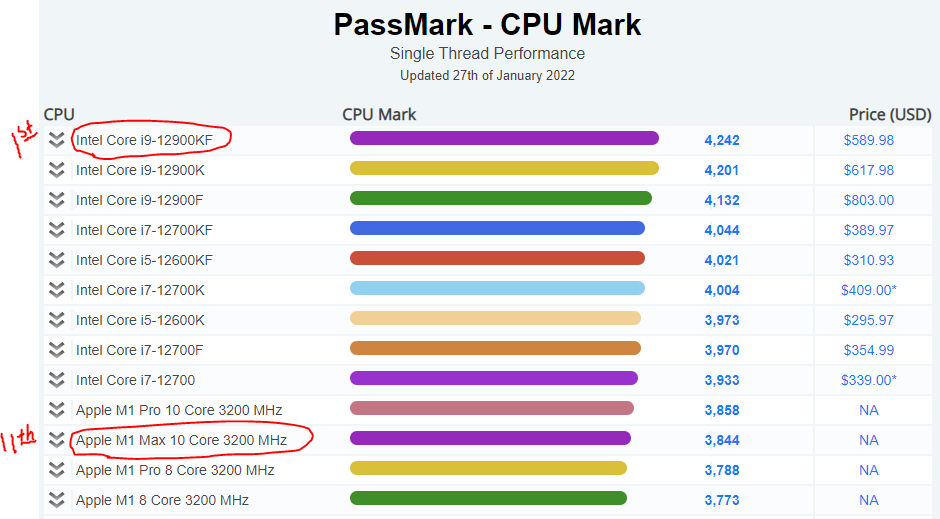

Up until recently, RISC chips were seen as the efficient alternative to CISC chips, suited only to special use cases. CISC chips were seen as the real powerhouses that could be used for gaming and serious computation tasks. While CISC chips ruled the world, Apple continued to develop the chips for the iPhone one generation at a time, until they became so fast and so efficient that they began to compete with desktop-grade CISC chips in performance and absolutely crush them in efficiency. Their performance and efficiency became so good that Apple eventually decided to create a line of laptops that no longer use CISC chips, but instead use its own in-house RISC chips based on its latest iPhone and iPad chips. Check out this graph, pretty amazing right?

This leads me back to the M1 Max, whose picture is featured at the top of the page. Apple managed to take its cell phone RISC chips and scale them up to power a full-fledged laptop. Not only did they manage to power it, but they managed to beat almost everyone in performance and efficiency at the same time. This would be like having an extremely high-performance car that also gets much better gas mileage than everyone else. They managed to create the 11th fastest CPU ever in single-thread performance, all while using a fraction of the power.

Now check out the power usage difference:

Since traditional CPUs in computers are separated from memory, latency and bandwidth problems arise from the longer distance and from the dependence on third-party hardware (motherboard buses) to facilitate communication. This is also true when dealing with discrete GPUs. Hardware vendors have worked around this issue by putting memory directly on discrete GPUs, thus improving bandwidth and reducing noise issues.

Now Apple, on the other hand, has instead opted to put everything on one chip, which is the SoC, or System on a Chip, approach. This allows for very high bandwidth between memory and the CPU, GPU, NPU (Neural Processing Unit), and a host of other hardware devices like specialized video encoders. You may say ‘so what’. Well, here is why that is awesome. The M1 line of chips has what is called ‘Unified Memory’, meaning the CPU, the GPU, and all the other processors on the SoC have direct access to the same memory. This is a huge step forward for computing because, instead of having separate memory for the CPU and GPU, they can now share the same resources and have extremely high-bandwidth memory access.

This is also a breakthrough because an optional 64 gigabytes of memory is available! At first you may say ‘so what’ again, but keep this in mind: the memory on a graphics card is the main limitation on what types of models and datasets can be handled during machine learning. The more memory the better. An extremely expensive RTX 3090 desktop-grade discrete GPU from Nvidia has only 24 GB of memory, meaning a small, compact MacBook Pro can train using a larger dataset than a specialized graphics card, a lot larger!
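To put rough numbers on that, here is a back-of-the-envelope Python sketch of how much memory training a model might need. It is only an estimate built on assumptions of my own: 4 bytes per parameter (32-bit floats), four copies of the parameters in memory (weights, gradients, and two optimizer buffers, as an Adam-style optimizer would keep), 1 GB treated as 10^9 bytes, and the memory used by activations and the dataset itself ignored. The function names are just for this example.

```python
GB = 10**9  # assumption: treat 1 GB as 10^9 bytes to keep the math simple


def training_footprint_gb(num_params, bytes_per_param=4, copies=4):
    """Rough training footprint in GB.

    Assumes `copies` full-size buffers of the parameters (weights,
    gradients, two optimizer buffers) and ignores activations and data.
    """
    return num_params * bytes_per_param * copies / GB


def fits_in_memory(num_params, memory_gb):
    """True if the rough footprint fits in `memory_gb` of memory."""
    return training_footprint_gb(num_params) <= memory_gb


if __name__ == "__main__":
    params = 2_000_000_000  # a hypothetical 2-billion-parameter model
    print(training_footprint_gb(params))
    print(fits_in_memory(params, 24))  # 24 GB card
    print(fits_in_memory(params, 64))  # 64 GB of unified memory
```

Under these assumptions, a hypothetical 2-billion-parameter model needs about 32 GB, which is more than a 24 GB card holds but fits within 64 GB. In practice not all of the unified memory is free for training, since the operating system and other programs share it, so treat this as a ceiling rather than a promise.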

In conclusion, I am obviously pumped up about the breakthroughs Apple’s chip development teams have produced. The bar has been raised so much higher in so many dimensions of performance that other chip manufacturers are being forced to take notice or RISC 😉 becoming a historical footnote.

Here is a cool paper on RISC vs. CISC that also mentions how much cheaper RISC chips are to design and produce due to their simplicity.

https://ijcat.com/archives/volume5/issue7/ijcatr05071015.pdf

Thanks for tuning in to another episode of … Computer Talk.

Don’t forget to rate, subscribe and hit that bell. (Sorry, couldn’t resist.)