we're in week 2 of putting together our vr simulation, and it's becoming more manageable as time goes on. i tried wit.ai and dialogflow for speech recognition before settling on microsoft's speech sdk for unity.

the basic idea is that i need to be able to do things in-game based on the user saying a correct phrase. the first step was to get voice recognition into a unity project.

with the speech sdk i was finally able to use a script that starts when i press a button, listens to what i say through my microphone, and outputs it to a text object in the game. i got this working with a physics-based button as well as with a floating button like the ones you see in most vr games.

the next step was to see if i could connect this script to another one and change something when a specific phrase is spoken. i also changed the language that the recognizer listens for.

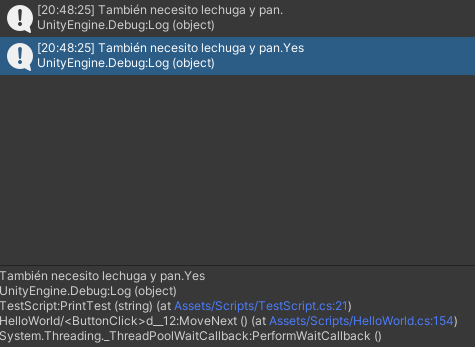

i appended "yes" to the message returned by the speech sdk when i spoke the phrase "tambien necesito lechuga y pan." then i had a second script, on another object in the scene, print that message. i assume this is what will need to happen in a game like this any time we interact with an npc. so i'm getting closer to what i need.
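a minimal sketch of that check, written in python rather than unity c# so it can run on its own. it is not the actual project script. the names `normalize`, `tag_result` and `PhraseRelay` are hypothetical, and it assumes the recognizer may hand back text with accents, capital letters and punctuation, so those are stripped before comparing.

```python
import string
import unicodedata

# the target phrase from the test above
TARGET_PHRASE = "tambien necesito lechuga y pan"


def normalize(text: str) -> str:
    """lowercase the text and drop accents, punctuation and extra spaces."""
    decomposed = unicodedata.normalize("NFD", text)
    # combining marks (category "Mn") are the accents split off by NFD
    no_accents = "".join(ch for ch in decomposed if unicodedata.category(ch) != "Mn")
    no_punct = "".join(ch for ch in no_accents if ch not in string.punctuation + "¿¡")
    return " ".join(no_punct.lower().split())


def tag_result(recognized: str, target: str = TARGET_PHRASE) -> str:
    """append "yes" to the recognized text when it matches the target phrase."""
    if normalize(recognized) == normalize(target):
        return recognized + " yes"
    return recognized


class PhraseRelay:
    """hypothetical stand-in for passing the result to a script on another object."""

    def __init__(self):
        self.listeners = []

    def subscribe(self, callback):
        self.listeners.append(callback)

    def publish(self, recognized: str):
        message = tag_result(recognized)
        for callback in self.listeners:
            callback(message)


if __name__ == "__main__":
    relay = PhraseRelay()
    relay.subscribe(print)  # the "other script" just prints what it receives
    relay.publish("También necesito lechuga y pan.")
```

normalizing both sides means a small difference in how the recognizer formats the sentence does not count as a wrong answer. whether that is lenient enough (or too lenient) for a language-learning game is a design choice.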

my next step is to try this out on placeholders for the npcs that will be in our game and see if i can get more complex things to happen. eventually, once a correct phrase is spoken the scene will continue, and if an incorrect phrase is spoken the npc and ui will indicate that the player needs to try again.
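the continue-or-retry flow could be sketched roughly like this, again in plain python and not as unity code. `Step`, `Conversation` and the first npc exchange are made up for illustration; only the last expected phrase comes from the test described earlier. the comparison here is deliberately simple (case and trailing punctuation only).

```python
from dataclasses import dataclass


@dataclass
class Step:
    npc_line: str   # what the npc says (placeholder text)
    expected: str   # the phrase the player has to say back


class Conversation:
    """walks through the steps; a wrong phrase keeps the player on the same step."""

    def __init__(self, steps):
        self.steps = steps
        self.index = 0

    @property
    def finished(self) -> bool:
        return self.index >= len(self.steps)

    def hear(self, spoken: str) -> str:
        """return "continue", "retry" or "done" for one recognized phrase."""
        if self.finished:
            return "done"
        expected = self.steps[self.index].expected
        if spoken.casefold().strip(" .!?") == expected.casefold():
            self.index += 1
            return "done" if self.finished else "continue"
        return "retry"


if __name__ == "__main__":
    convo = Conversation([
        Step("¿qué necesitas?", "necesito lechuga"),                # hypothetical exchange
        Step("¿algo más?", "tambien necesito lechuga y pan"),
    ])
    for phrase in ["necesito pan.", "necesito lechuga.", "tambien necesito lechuga y pan."]:
        print(phrase, "->", convo.hear(phrase))
```

the npc and ui would react to the returned string: "retry" triggers the try-again feedback, "continue" plays the next npc line, and "done" lets the scene move on.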