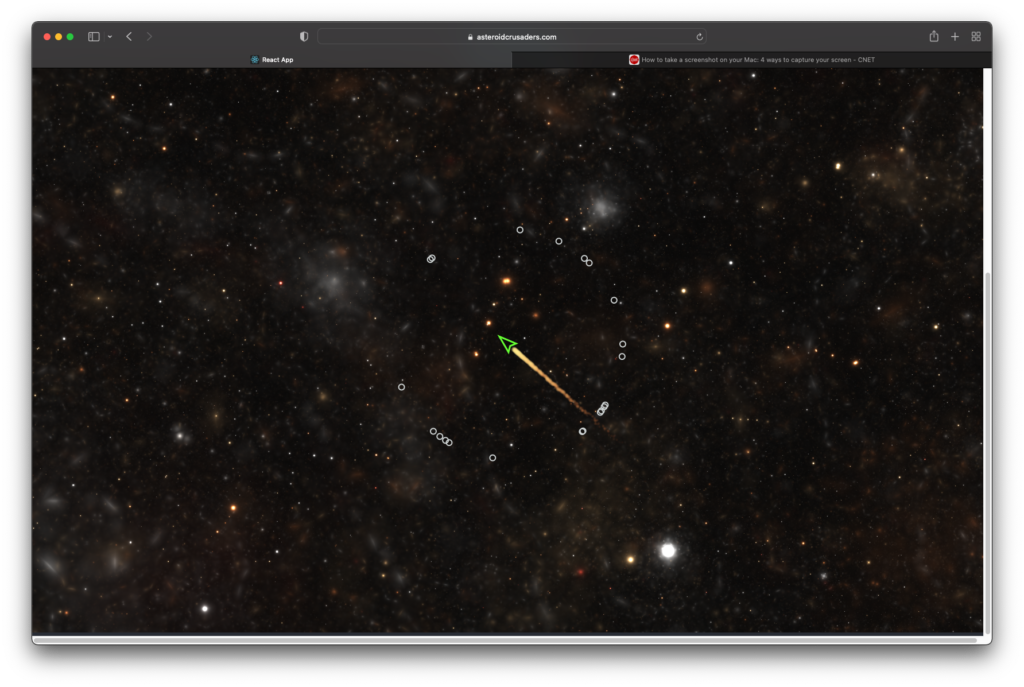

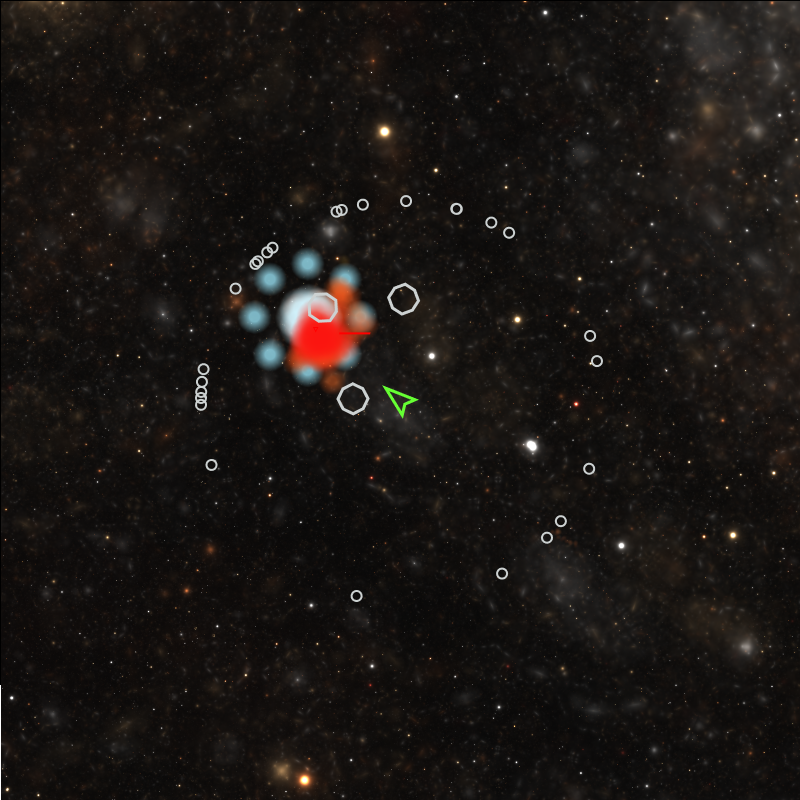

Just like that, we’re already wrapping up week 8 of our 10-week (more like 9-week) project. We’ve made pretty good progress on Asteroid Crusaders, and although we’re not exactly 80% done, next week still has great promise for productivity and I’m feeling a little reflective. While not the biggest project I’ve worked on, Asteroid Crusaders may rank among the more complex, with many different parts that have to come together and some mind-bending simultaneous programming in a curly-brace-and-semicolon language (JavaScript) alongside a whitespace language (Python). Part of the complexity arose because we had to come up with the overall design of the project in a relatively short time and then had a tight time budget to implement it all. In other words, there was no time to step back and reevaluate the approach. And that’s how I wound up doing something I knew I would never want to do: write a game in JavaScript.

One of the interesting things about programming is that at almost any point in the coding process we are redoing something that has already been done a thousand times before, and likely done better. There is nothing new in Asteroid Crusaders, especially in its theme, and the implementation is largely a combination of lots of little Stack Overflow posts (noted in the code where reliance was particularly heavy). It’s only in the sum of the parts that the originality comes into focus, hopefully. Now that I have the great advantage of hindsight, I know one thing for certain: I should have leveraged much more of that sweet code perfected by someone else.

I can’t even begin to enumerate the code written by others that went into our version of Asteroids, from Python and JavaScript themselves all the way down into a multitude of libraries. But there are some things I did myself: I wrote a WebSocket server in Python using TCP sockets. I wrote a (very simple) physics simulation in Python. I wrote a game in JavaScript.
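For the curious, here is roughly what "a WebSocket server on TCP sockets" involves. This is a minimal sketch, not the actual Asteroid Crusaders server: it shows the RFC 6455 opening handshake (hashing the client's `Sec-WebSocket-Key` with a fixed GUID) and the framing of small text messages. The function names, the port, and the one-client, one-message, small-payload simplifications are all hypothetical choices made for illustration.

```python
import base64
import hashlib
import socket
import struct

# Fixed GUID defined by RFC 6455 for computing Sec-WebSocket-Accept.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def accept_key(client_key: str) -> str:
    """Derive Sec-WebSocket-Accept: base64(SHA-1(client key + GUID))."""
    digest = hashlib.sha1((client_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def handshake_response(request: str) -> bytes:
    """Build the 101 Switching Protocols reply to a raw HTTP upgrade request."""
    headers = {}
    for line in request.split("\r\n")[1:]:  # skip the request line
        if ": " in line:
            name, value = line.split(": ", 1)
            headers[name.lower()] = value.strip()
    key = headers["sec-websocket-key"]
    return (
        "HTTP/1.1 101 Switching Protocols\r\n"
        "Upgrade: websocket\r\n"
        "Connection: Upgrade\r\n"
        f"Sec-WebSocket-Accept: {accept_key(key)}\r\n"
        "\r\n"
    ).encode("ascii")


def encode_text_frame(message: str) -> bytes:
    """Wrap a string in one unmasked server-to-client text frame."""
    payload = message.encode("utf-8")
    header = bytes([0x81])  # FIN bit set, opcode 0x1 (text)
    length = len(payload)
    if length < 126:
        header += bytes([length])
    elif length < 2**16:
        header += bytes([126]) + struct.pack("!H", length)  # 16-bit length
    else:
        header += bytes([127]) + struct.pack("!Q", length)  # 64-bit length
    return header + payload


def decode_client_frame(frame: bytes) -> str:
    """Unmask one client-to-server text frame.

    Simplification: assumes a single frame with a payload under 126 bytes,
    so the 4-byte mask starts right after the 2-byte header.
    """
    length = frame[1] & 0x7F  # low 7 bits; the high bit is the mask flag
    mask = frame[2:6]
    data = frame[6:6 + length]
    return bytes(b ^ mask[i % 4] for i, b in enumerate(data)).decode("utf-8")


def serve_one_client(host: str = "127.0.0.1", port: int = 8765) -> None:
    """Accept one connection, do the handshake, and echo one message back.

    Simplification: assumes each recv() returns one complete request or frame.
    """
    with socket.create_server((host, port)) as server:
        conn, _ = server.accept()
        with conn:
            request = conn.recv(4096).decode("latin-1")
            conn.sendall(handshake_response(request))
            message = decode_client_frame(conn.recv(4096))
            conn.sendall(encode_text_frame(message))
```

The asymmetry between the two frame functions is deliberate: browsers always mask client-to-server frames and servers never mask theirs. A real game server would also need to handle fragmented frames, ping/pong, close frames, and many clients at once, which is exactly the kind of code it pays to borrow.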

At the start of the project we made a significant decision about how to implement the WebGL layer. We could either use raw WebGL, with all that fun linear algebra, or use a library. We went with PixiJS, a 2D-focused WebGL library. I think it is actually written in TypeScript, which makes a lot of sense. But I started using it with JavaScript, and by about week 3, when I started to realize this was a Bad Idea, there was no time to go back.

We are supposed to spend about 10 hours per week on this project. Today I ran into a client-side bug. I was trying to add a new feature, and some of the changes it involved broke a previously working feature. After some time I found that one specific line of code was responsible: if I commented it out, the problem appeared; if I left it in, everything worked fine. The problem was that this line of code made no sense.

5 hours later…

Maybe you can already see where this is going. That line of code was indeed broken, and it was stopping the rest of the function from executing. It was that rest of the function, once it was allowed to run, that caused the bug. Thanks, JavaScript, for your ‘stay alive at all costs’ attitude. And that’s why next time I do this I am going to use Unity: AAA game engine, built-in WebGL export, statically typed, built-in networking.