Neural Networks, and machine learning in general, contain a lot of very scary mathematic sounding terminology. But after having studied them for a few weeks now, they are actually quite easy to understand once you get past the vocabulary. This week’s topic will be focused on Neural Networks, and how science can imitate nature to create some awesome things!

Artificial Neural Networks (which will be referred to as NNs) are a supervised machine learning model inspired by biological neural networks. Artificial Neural Networks are made up of a collection of nodes called artificial neurons, which loosely model the neurons of a biological brain. Each artificial neuron can receive a signal, process it, and then signal all other neurons connected to it. In machine learning sense, this ‘signal’ is a real number, and the output of each neuron is computed by some linear or non-linear function on the sum of its inputs. Each connection is called an edge, and each neuron / edge pair has a weight which is adjusted as the artificial NN model is trained.

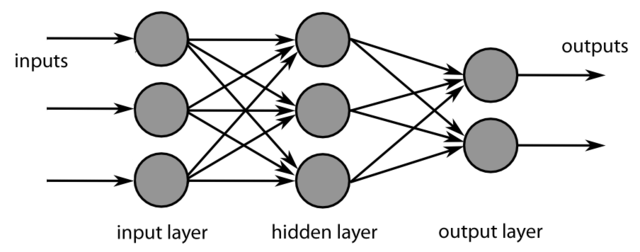

NN models are typically architected as a collection of neurons grouped into layers. The layers are organized from left to right, with the left-most layer called the input layer and the right-most layer called the output layer. The input layer represents the input feature set by containing a neuron for each feature. The output layer represents the generated output from the learning model, and typically contains two neurons selected for use in this learning model. It contains neurons for each possible classification output and an attached probability of that output.

The layers in the middle are known as the ‘hidden layers’. They comprise most of the neurons in the model and are the secret to the data manipulation to get a desired output. Each neuron in a layer receives an input from every neuron in the previous layer and provides it’s output to every neuron in the next layer. Each neuron takes the mass of input data, passes it through a mathematical ‘activation function’, and then passes this output to the next layer.

The activation function is a function that helps the NN learn complex patterns in the data and adjusts the weights of each neuron based on the affective rate of change it has on an output. These functions can be linear or non-linear, depending on if the NN model is supposed to generate a continuous (i.e. predict a stock price) or discrete (i.e. is this a picture of an apple) output. A commonly used activation function is the Sigmoid Function which returns an output between 0 and 1.

As the activation layer helps to consume inputs and adjust weights between layers, you may be wondering how the initial set of weights for each input feature is selected. This can be randomly selected at first to help the model consume a first series of information. These initial input weights are then adjusted via several methods.

If you can represent the problem you are trying to solve via a differentiable objective function then you can use a process known as ‘back propagation’ which will retrain a neural network model, while optimizing the input weights in a way that reduces the most amount of error in the output layer. A typical back propagation method may use something like a mean-square error calculation to find ways to reduce error with the input weights.

If your objective function is non-differentiable, then things get a bit more complicated. One of the most well-known solutions to this issue is to use a genetical machine learning algorithm to train the NN. A genetic algorithm would begin by creating a set of randomly selecting input weights, a set meaning it will train multiple version of your Neural Network. It will then check the accuracy of each NN, select the best results, and will generate another set of inputs based on some mutation / splicing of inputs from the best results (essentially creating the ‘next’ generation of inputs from a set of parent inputs). Genetic algorithms follow an evolutionary model to forming an optimized solution, which I think is pretty awesome.

Another method is through reinforcement training, a training model which mimics how real-life learning occurs. Essentially, the reinforcement model would begin by selecting a random set of inputs until it gets a decent result. It will then ‘learn’ from that input and generate an informed set of inputs based on that result. This cycle will continue until it generates an optimized solution. It acts similarly to the genetic algorithm, but is based on reinforcing ‘correct’ guesses at the optimized solution.

I’ll be implementing a neural network which is trained via a genetic algorithm over the next few weeks. The goal of this neural net will be to create a way to visualize some of the transformations happening in the hidden layers. Will check in with you guys shortly with a project report! Thanks for reading.