Have you ever been assigned a task and found yourself asking: “What’s the point of this task? Why do I need to do this?” Very likely, no one told you what the task was for. It was missing a critical element: the purpose. Just as the purpose of a task can easily be left out, a purpose statement for an assignment is often missing in course design too.

Creating a purpose statement for assignments is an activity that I enjoy very much. I encourage instructors and course developers to be intentional about that statement, which serves as a declaration of the underlying reasons, directions, and focus of what comes next in an assignment. But most importantly, the statement responds to the question I mentioned at the beginning of this blog…why…?

Just as a purpose statement should be powerful enough to guide, shape, and undergird a business (Yohn, 2022), a purpose statement for an assignment can guide students in making decisions about using strategies and resources, shape students’ motivation and engagement in the process of completing the assignment, and undergird their knowledge and skills. Let’s take a closer look at the power of a purpose statement.

What does “purpose” mean?

Merriam-Webster defines purpose as “something set up as an object or end to be attained”, while Cambridge Dictionary defines it as “why you do something or why something exists”. These definitions show us that the purpose is the reason and the intention behind an action.

Why is a purpose important in an assignment?

The purpose statement in an assignment serves important roles for students, instructors, and instructional designers (believe it or not!).

For students

The purpose will:

- answer the question “why will I need to complete this assignment?”

- give the reason to spend time and resources working out math problems, outlining a paper, answering quiz questions, posting their ideas in a discussion, and many other learning activities.

- highlight the assignment’s significance and value within the context of the course.

- guide them in understanding the requirements and expectations of the assignment from the start.

For instructors

The purpose will:

- guide the scope, depth, and significance of the assignment.

- help to craft a clear and concise declaration of the assignment’s objective or central argument.

- maintain the focus on and alignment with the outcome(s) throughout the assignment.

- help identify the prior knowledge and skills students will need to complete the assignment.

- guide the selection of support resources.

For instructional designers

The purpose will:

- guide building the structure of the assignment components.

- help identify additional support resources when needed.

- facilitate an understanding of the alignment of outcome(s).

- help test the assignment from the student’s perspective and experience.

Is there a wrong purpose?

No, not really. But it may be lacking or it may be phrased as a task. Let’s see an example (adapted from a variety of real-life examples) below:

Project Assignment:

“The purpose of this assignment is to work in your group to create a PowerPoint presentation about the team project developed in the course. Include the following in the presentation:

- Title

- Context

- Purpose of project

- Target audience

- Application of methods

- Results

- Recommendations

- Sources (at least 10)

- Images and pictures

The presentation should be a minimum of 6 slides and must include a short reflection on your experience conducting the project as a team.”

What is unclear in this purpose? Well, unless the objective of the assignment is to refine students’ presentation-building skills, it is unclear why students will be creating a presentation for a project that they have already developed. In this example, the request to create a presentation and the specific details about its content and format look more like instructions than a clear reason for the assignment to exist.

A better description of the purpose could be:

“The purpose of this assignment is to help you convey complex information and concepts in visual and graphic formats. This will help you practice your skills in summarizing and synthesizing your research as well as in effective data visualization.”

The purpose statement particularly underscores transparency, value, and meaning. When students know why, they may be more compelled to engage in the what and how of the assignment. A specific purpose statement can promote appreciation for learning through the assignment (Christopher, 2018).

Examples of purpose statements

Below you will find a few examples of purpose statements from different subject areas.

Example 1: Application and Dialogue (Discussion assignment)

Courtesy of Prof. Courtney Campbell – PHL / REL 344

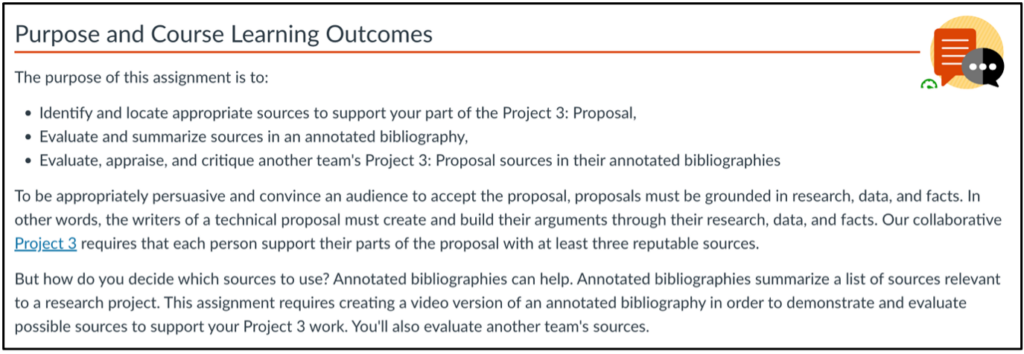

Example 2: An annotated bibliography (Written assignment)

Courtesy of Prof. Emily Elbom – WR 227Z

Example 3: Reflect and Share (Discussion assignment)

Courtesy of Profs. Nordica MacCarty and Shaozeng Zhang – ANTH / HEST 201

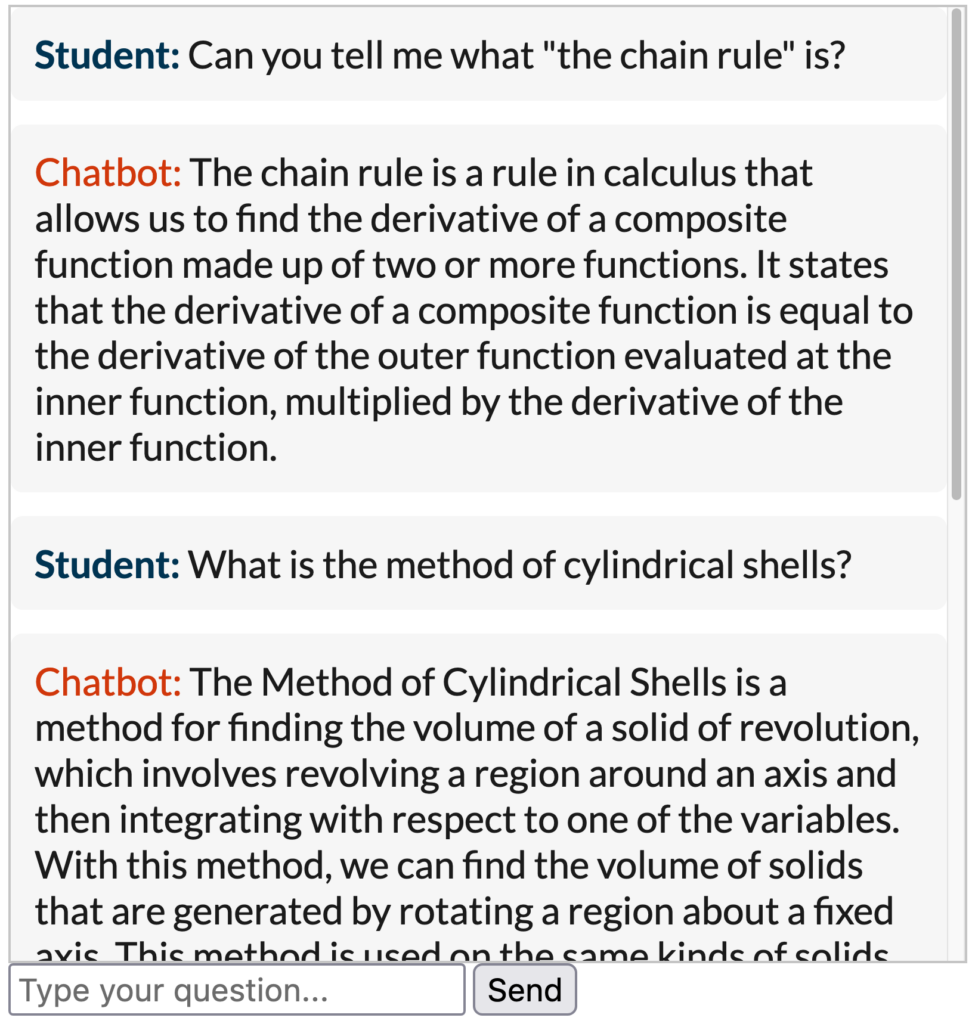

With the increased availability of large language models (LLMs) and artificial intelligence (AI) tools (e.g., ChatGPT, Claude 2), many instructors worry that students will resort to these tools to complete their assignments. While a clear and explicit purpose statement won’t deter the use of these highly sophisticated tools, transparency in the assignment description could be a good motivator to complete the assignments with little or no assistance from AI tools.

Conclusion

“Knowing why you do what you do is crucial” in life, says Christina Tiplea. The same applies to learning: when the “why” is clear, an activity or assignment can become more meaningful and can motivate and engage students. Students may also feel less motivated to use AI tools (Trust, 2023).

Note: This blog was written entirely by me without the aid of any artificial intelligence tool. It was peer-reviewed by a human colleague.

Resources:

Christopher, K. (2018). What are we doing and why? Transparent assignment design benefits students and faculty alike. The Flourishing Academic.

Sinek, S. (2011). Start with why. Penguin Publishing Group.

Trust, T. (2023). Addressing the Possibility of AI-Driven Cheating, Part 2. Faculty Focus.

Yohn, D. L. (2022). Making purpose statements matter. SHRM Executive Network.