Clara Bird¹ and Karen Lohman²

¹Master’s Student in Wildlife Science, Geospatial Ecology of Marine Megafauna Lab

²Master’s Student in Wildlife Science, Cetacean Conservation and Genomics Laboratory

In a departure from my typical science-focused blog, this week I thought I would share more about myself. I was inspired by International Women’s Day and, after some reflection on the last eight months as a graduate student, I decided to look back on the role that coding has played in my life. We often hear that coding can be empowering, so I thought it might be cool to talk about my personal experience of feeling empowered by coding. I’ve also invited a fellow grad student in the Marine Mammal Institute, Karen Lohman, to co-author this post. We’re going to briefly talk about our experiences with coding and then finish with advice for getting started with coding and with coding for data analysis.

Our Stories

Clara

I’ve only been coding for a little over two and a half years. In summer 2017 I did an NSF REU (Research Experience for Undergraduates) at Bigelow Laboratory for Ocean Sciences, and for my data analysis project I taught myself Python (with the support of a post-doc). During those 10 weeks, I coded all day, every workday. From that experience, I not only acquired the hard skill of programming, but I also gained a good amount of confidence in myself, and here’s why: for the first three years of my undergraduate career, coding was a daunting skill that I knew I would eventually need but did not know how to start learning. So, I essentially ended up learning by jumping off the deep end. I found the immersion experience to be the most effective learning method for me. With coding, you find out if you got something right (or wrong) almost instantaneously. I’ve found that this is a double-edged sword: it means that you can easily have days where everything goes wrong. But the feeling when it finally works is what I think of when I hear the term empowerment. I’m not quite sure how to put it into words, but it’s a combination of independence, confidence, and success.

Aside from learning the fundamentals, I finished that summer with confidence in my ability to teach myself not just new coding skills, but other skills as well. I think that feeling confident in my ability to learn something new has been the most helpful factor in allowing me to hit the ground running in grad school and in keeping the ‘imposter syndrome’ at bay (most of the time).

Clara’s Favorite Command: DataFrame.groupby (Python, pandas) – Say you have a column of measurements and a second column with the field site where each measurement was taken. If you wanted the mean of the measurements at each site, you could use groupby to get it. It would look like this: dataframe.groupby('Location')['Measurement'].mean().reset_index()
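Here is a minimal runnable sketch of that groupby call, assuming pandas is installed. The site names and measurement values are made up purely for illustration.

```python
import pandas as pd

# Hypothetical data: one row per measurement, tagged with its field site.
dataframe = pd.DataFrame({
    "Location": ["Site A", "Site A", "Site B", "Site B", "Site B"],
    "Measurement": [2.0, 4.0, 1.0, 3.0, 8.0],
})

# Group rows by field site, average the measurements within each group,
# then turn the grouped index back into a regular 'Location' column.
site_means = dataframe.groupby("Location")["Measurement"].mean().reset_index()
print(site_means)
```

The result has one row per site, which is usually the shape you want for a summary table or a plot.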

Karen

I’m quite new to coding, but once I started learning I was completely enchanted! I was first introduced to coding while working as a field assistant for a PhD student (a true R wizard who has since developed deep learning computer vision packages for automated camera trap image analysis) in the cloud forest of the Ecuadorian Andes. This remote jungle was where I first saw how useful coding can be for data management and analysis. It was a strange juxtaposition between being fully immersed in nature for remote field work and learning to think along the lines of coding syntax. It wasn’t the typical introduction to R most people have, but it was an effective hook. We were able to produce preliminary figures and analysis as we collected data, which made a tough field season more rewarding. Coding gave us instant results and motivation.

I committed to fully learning how to code during my first year of graduate school. I first learned the Linux command line and Python, and then I started working in R the following summer. My graduate research uses population genetics/genomics to better understand the migratory connections of humpback whales. This research means I spend a great deal of time developing bioinformatics and big data skills, which are essential for this area of research and a goal for my career. For me, coding is a skill that only returns what you put in; you can learn to code quite quickly if you devote the time. After a year of intense learning and struggle, I am writing better code every day.

In grad school, research progress can be nebulous, but for me coding has become a concrete way to measure success. If my code ran, I have a win for the week. If not, then I have a clear place to start working the next day. These “tiny wins” are adding up, and coding has become a huge confidence boost.

Karen’s Favorite Command: grep (Linux) – Searches for a string pattern and prints all lines containing a match to the screen. grep has a variety of flags, which makes it a versatile command that I use every time I’m working in Linux.
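For readers more at home in Python than on the command line, the core behavior of grep can be approximated in a few lines. This is only a rough sketch of the idea, not how grep itself is implemented. The function name grep_lines and the sample lines are invented for illustration.

```python
import re

def grep_lines(pattern, lines, ignore_case=False):
    """Return the lines that contain a match for the regular expression."""
    flags = re.IGNORECASE if ignore_case else 0
    regex = re.compile(pattern, flags)
    return [line for line in lines if regex.search(line)]

# Made-up lines standing in for the contents of a text file
sample = [
    "sample_01 humpback Oregon",
    "sample_02 gray Oregon",
    "sample_03 Humpback Hawaii",
]

# Case-sensitive search, similar to `grep humpback`
print(grep_lines("humpback", sample))

# Case-insensitive search, similar to `grep -i humpback`
print(grep_lines("humpback", sample, ignore_case=True))
```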

Advice

Getting Started

- Be kind to yourself and think of it as learning a foreign language. It takes a long time and a lot of practice.

- Once you know the fundamental concepts in any language, learning another will be easier (we promise!).

- Ask for help! The chances that you have run into a unique error are quite small. Someone out there has already solved your problem, whether it’s a lab mate or another researcher you find on Google!

Coding Tips

1. Set yourself up for success by formatting your datasheets properly

- Instead of focusing on making your spreadsheet easy to read, try to think about how you want to use the data in the analysis.

- Avoid formatting (merged cells, wrap text) and spaces in headers

- Try to think ahead when formatting your spreadsheet

- Maybe chat with someone who has experience and get their advice!
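If you inherit a datasheet whose headers contain spaces or units in parentheses, one option is to clean the column names in code before the analysis starts. The sketch below assumes pandas is installed, and the column names and values are hypothetical.

```python
import pandas as pd

# Hypothetical datasheet with analysis-unfriendly headers
# (spaces, mixed case, units in parentheses).
raw = pd.DataFrame({
    "Field Site": ["A", "B"],
    "Body Length (m)": [12.1, 13.4],
})

# Normalize headers: trim whitespace, lowercase, replace every run of
# non-alphanumeric characters with "_", then drop any trailing "_".
clean = raw.copy()
clean.columns = (
    clean.columns.str.strip()
    .str.lower()
    .str.replace(r"[^a-z0-9]+", "_", regex=True)
    .str.strip("_")
)
print(list(clean.columns))
```

Headers like these can be typed without quotes or escaping in most languages, which removes a common source of errors.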

2. Start with a plan, start on paper

This low-tech solution saves countless hours of code confusion. It can be especially helpful when manipulating large data frames or running multistep analyses. Drawing out the structure of your data and checking it frequently in your code (with ‘head’ in R/Linux) after each manipulation can keep you on track. It is easy to code yourself into circles when you don’t have a clear understanding of what you’re trying to do in each step. Or worse, you could end up with code that runs but doesn’t conduct the analysis you intended or needed.
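The same habit of checking your data against the plan works in Python. Below is a small sketch, assuming pandas is installed, that peeks at the data with head and confirms the shape after one manipulation step. The sightings table is invented for illustration.

```python
import pandas as pd

# Hypothetical data: three sightings, one with a missing group size.
sightings = pd.DataFrame({
    "site": ["A", "A", "B"],
    "group_size": [3, None, 5],
})

# Step 1 of the paper plan: drop rows with a missing group size.
complete = sightings.dropna(subset=["group_size"])

# Check the result against the plan: look at the first rows
# and confirm the number of rows and columns.
print(complete.head())
assert complete.shape == (2, 2), "expected 2 rows and 2 columns after dropping missing values"
```

If the assertion fails, you know which step drifted away from the plan, instead of discovering it several steps later.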

3. Good organization and habits will get you far

The Nice R Code blog has an excellent post on project organization and file structure. I highly recommend reading and implementing their self-contained scripting suggestions. The further you get into your data analysis, the more object, directory, and function names you have to remember. Develop a naming scheme that makes sense for your project (e.g. flexible, number based) and stick with it. Using temporary object names inside functions or code blocks can be a good way to distinguish work in progress from final results.

4. Annotate. Then annotate some more.

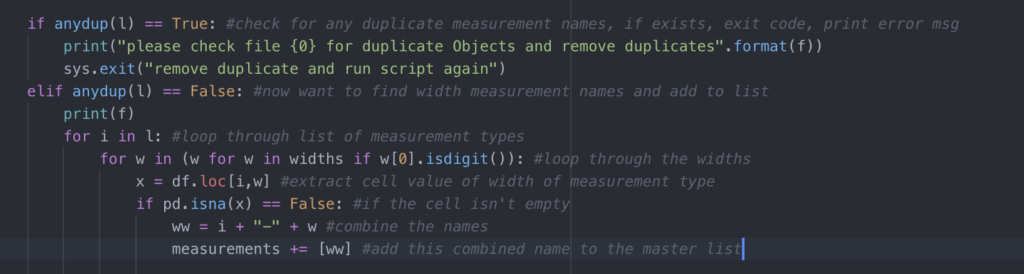

Make comments in your code so you can remember what each section or line is for. This makes debugging much easier! Annotation is also a good way to stay on track as you code, because you’ll be describing the goal of every line (remember tip 2?). If you’re following a tutorial (or a Stack Overflow answer), copy the web address into your annotation so you can find it later. At the end of a coding session, make a quick note of your thought process so it’s easier to pick up when you come back. It’s also a good habit to add some ‘metadata’ details to the top of your script describing what the script is intended for, what the input files are, the expected outputs, and any other pertinent details for that script. Your future self will thank you!
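As one possible illustration, here is what a short annotated Python script with a ‘metadata’ header might look like. The analysis, the whale IDs, and the dive durations are all made up, and the header layout is just one convention among many.

```python
"""
Purpose: Summarize mean dive duration per whale (hypothetical example).
Inputs:  A list of (whale_id, dive_duration_seconds) records.
Outputs: A dictionary mapping each whale_id to its mean dive duration in seconds.
Notes:   If you adapt code from a tutorial or forum answer, paste its web
         address in a comment next to the lines that use it.
"""
from collections import defaultdict

# Made-up records standing in for a real input file
dives = [("whale_1", 60.0), ("whale_1", 90.0), ("whale_2", 120.0)]

# Collect every dive duration under its whale ID
durations = defaultdict(list)
for whale_id, seconds in dives:
    durations[whale_id].append(seconds)

# Average the durations for each whale
mean_duration = {whale: sum(vals) / len(vals) for whale, vals in durations.items()}
print(mean_duration)
```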

5. Get with Git/GitHub already

Git, together with GitHub, is a great way to manage version control. Remember how life-changing the advent of Dropbox was? This is like that, but for code! GitHub has also become a great open-source repository for newly developed code and packages. In addition to backing up and storing your code, GitHub has become a ‘coding CV’ that other researchers look to when hiring.

Wondering how to get started with GitHub? Check out this guide: https://guides.github.com/activities/hello-world/

Looking for a good text/code editor? Check out Atom (https://atom.io/); you can push your edits straight to GitHub from there.

6. You don’t have to learn everything, but you should probably learn the R Tidyverse ASAP

The Tidyverse is a collection of R packages for data manipulation that make data wrangling a breeze. It also includes ggplot2, an incredibly versatile data visualization package. For Python users hesitant to start working in R, the Tidyverse is a great place to start: the syntax will feel more familiar to Python users, and it has wonderful documentation online. It is also reminiscent of the awk/sed tools from Linux, and dplyr removes much of the need to write loops. Loops in any language are awful; learn how to write them, and then learn how to avoid them.
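The “learn loops, then avoid them” idea is not specific to R. As a rough Python analogue (not Tidyverse code), the sketch below does the same unit conversion with a loop and with a single column-wise operation in pandas. It assumes pandas is installed, and the lengths are made up.

```python
import pandas as pd

# Hypothetical body lengths in centimeters
lengths = pd.DataFrame({"length_cm": [150.0, 200.0, 250.0]})

# Loop version: convert each value one at a time.
length_m_loop = []
for value in lengths["length_cm"]:
    length_m_loop.append(value / 100)

# Vectorized version: the same conversion applied to the whole column at once.
lengths["length_m"] = lengths["length_cm"] / 100

print(length_m_loop)
print(lengths)
```

Both versions give the same numbers; the column-wise version is shorter and leaves less room for indexing mistakes.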

7. Functions!

Break your code out into blocks that can be run as functions! This allows easier repetition of data analysis, in a more readable format. If you need to call your functions across multiple scripts, put them all into one ‘function.R’ script and source it in your working scripts. This approach ensures that all the scripts can access the same function, without copying and pasting it into multiple scripts. Then if you edit the function, it is changed in one place and passed to all dependent scripts.
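Here is a small Python sketch of the same principle: write the analysis once as a function, then call it on every dataset. The function name summarize and the measurements are hypothetical. In a multi-script Python project, the function would typically live in its own module and be imported, which plays the same role as sourcing an R script.

```python
def summarize(values):
    """Return the count, mean, minimum, and maximum of a list of numbers."""
    return {
        "n": len(values),
        "mean": sum(values) / len(values),
        "min": min(values),
        "max": max(values),
    }

# Made-up measurements from two field seasons
season_1 = [2.0, 4.0, 6.0]
season_2 = [1.0, 3.0]

# The same analysis runs on each dataset without copying any code.
for name, data in [("season_1", season_1), ("season_2", season_2)]:
    print(name, summarize(data))
```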

8. Don’t take error messages personally

- Repeat after me: everyone Googles every other line of code, and everyone forgets the command some (…er, every) time.

- Debugging is a lifestyle, not a task item.

- One way to make it less painful is to keep a list of fixes that you find yourself needing multiple times. And ask for help when you’re stuck!

9. Troubleshooting

- Know that you’re supposed to Google but not sure what to search for?

  - Start by copying and pasting the error message.

- When I started, it was hard to know how to phrase what I wanted. These are some common terms:

  - A dataframe is the coding equivalent of a spreadsheet/table.

  - Do you want to combine two dataframes side by side? That’s a merge.

  - Do you want to stack one dataframe on top of another? That’s concatenating.

  - Do you want to get the average (or some other statistic) of values in a column that are all from one group or category? Check out group by or aggregate.

  - A loop steps through every value in a column or list and does something with it (uses it in an equation, uses it in an if/else statement, etc.).
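To connect those search terms to actual code, here is a short sketch of each one in Python, assuming pandas is installed. The tables and column names are invented for illustration, and other languages use similar vocabulary.

```python
import pandas as pd

# Hypothetical dataframes
sightings = pd.DataFrame({"whale_id": [1, 2, 2], "group_size": [3, 5, 7]})
whales = pd.DataFrame({"whale_id": [1, 2], "species": ["humpback", "gray"]})
more_sightings = pd.DataFrame({"whale_id": [1], "group_size": [4]})

# Merge: combine columns from two dataframes by matching on a shared key.
merged = sightings.merge(whales, on="whale_id", how="left")

# Concatenate: stack one dataframe on top of another.
stacked = pd.concat([sightings, more_sightings], ignore_index=True)

# Group by: compute one summary statistic per category.
mean_group_size = stacked.groupby("whale_id")["group_size"].mean()

# Loop: visit each value in a column and do something with it.
labels = []
for size in stacked["group_size"]:
    labels.append("large group" if size >= 5 else "small group")

print(merged)
print(stacked)
print(mean_group_size)
print(labels)
```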

Favorite Coding Resources (other than GitHub…)

- Learnxinyminutes.com

  - This is great ‘one stop googling’ for coding in almost any language! I frequently switch between coding languages, and as a result almost always have this open to check syntax.

- https://swirlstats.com/

  - This is a really good resource for getting an introduction to R.

Parting Thoughts

We hope that our stories and advice have been helpful! As with many skills, you tend to only see people once they have made it over the learning curve. But as you’ve read, Karen and I both started recently and felt intimidated at the beginning. So, be patient, be kind to yourself, believe in yourself, and good luck!